Recently, Wyvern announced the launch of its Open Data Initiative, which makes a range of high-resolution visible and near-infrared (VNIR) hyperspectral images freely available. Images from its Dragonette-001 satellite cover 23 bands in the 500 to 800 nanometer range, with a ground sample distance of 5.3 meters at nadir. Hyperspectral imaging is one of the most promising technologies in Earth observation, with applications in industries such as agriculture, forestry, mining, oceanography, and security. Its main advantage over traditional multispectral imaging is that it can detect subtle biochemical and biophysical changes in land cover.

Today, we will use Metaspectral's cloud-based Fusion platform to explore one of Wyvern's images of the Suez Canal in Egypt. Fusion is purpose-built to process the large data volumes produced by hyperspectral imaging and applies state-of-the-art machine learning and deep learning algorithms to them. Let's dive in!

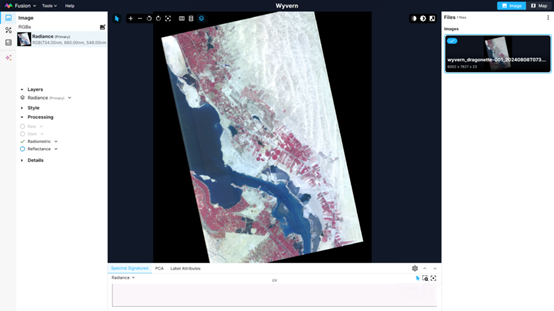

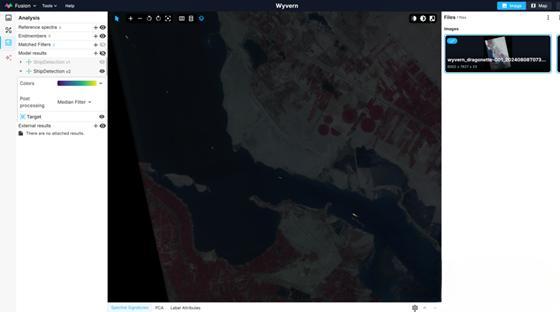

Fusion runs entirely in the browser, and images can be uploaded to the platform simply by dragging and dropping them. This image is measured in radiance (W/(sr·m²·µm)) and contains nearly 50 million pixels. It shows part of the Great Bitter Lake, where several ships are sailing.
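To give a sense of scale, the sketch below estimates how much memory such a cube occupies. It assumes, hypothetically, that "nearly 50 million pixels" counts spatial pixels, that each pixel carries all 23 bands, and that every sample is stored as a 32-bit float; the actual storage format of the file is not stated here, and `cube_size_bytes` is an illustrative helper, not part of Fusion.

```python
def cube_size_bytes(n_pixels: int, n_bands: int, bytes_per_sample: int = 4) -> int:
    """Bytes needed to hold n_pixels x n_bands samples.

    Assumption: bytes_per_sample=4 corresponds to 32-bit floats (hypothetical).
    """
    return n_pixels * n_bands * bytes_per_sample


# ~50 million spatial pixels and 23 spectral bands, as quoted in the article
size = cube_size_bytes(50_000_000, 23)
print(f"about {size / 1e9:.1f} GB")
```

Under these assumptions a single image is roughly 4.6 GB in memory, which is why browser-side tools typically stream or tile the data rather than load it whole.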

Fusion Explorer displaying the complete image

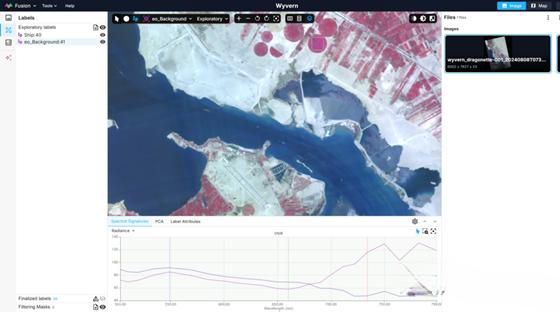

After labeling some ships and crops, we can see clear differences between their spectral signatures. The crop spectra show the characteristic sharp increase across the red edge (roughly 680 to 750 nanometers), while the ship spectra show the opposite trend.
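One simple way to quantify this difference is to average the spectra inside each labeled region and measure the slope between a band near the start and a band near the end of the red edge. The sketch below does that with NumPy on toy data; the band centers (23 evenly spaced bands from 500 to 800 nm), the 680/750 nm end points, the synthetic spectra, and the helper names are all hypothetical and are not taken from Wyvern's data or Fusion.

```python
import numpy as np


def mean_spectrum(cube: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Average spectrum of the pixels selected by a boolean (rows, cols) mask."""
    return cube[mask].mean(axis=0)


def red_edge_slope(spectrum, wavelengths, lo=680.0, hi=750.0):
    """Change per nanometre between the bands closest to lo and hi."""
    i = int(np.argmin(np.abs(wavelengths - lo)))
    j = int(np.argmin(np.abs(wavelengths - hi)))
    return (spectrum[j] - spectrum[i]) / (wavelengths[j] - wavelengths[i])


# Toy data (hypothetical): 23 evenly spaced bands from 500 to 800 nm.
wl = np.linspace(500.0, 800.0, 23)
crop = 1.0 / (1.0 + np.exp(-(wl - 715.0) / 15.0))  # rises sharply across the red edge
ship = 1.0 - (wl - 500.0) / 600.0                  # declines gently with wavelength

cube = np.empty((4, 4, 23))
cube[:2] = crop  # top two rows: "crop" pixels
cube[2:] = ship  # bottom two rows: "ship" pixels
crop_mask = np.zeros((4, 4), dtype=bool)
crop_mask[:2] = True
ship_mask = ~crop_mask

for name, mask in [("crop", crop_mask), ("ship", ship_mask)]:
    slope = red_edge_slope(mean_spectrum(cube, mask), wl)
    print(f"{name}: red-edge slope {slope:+.4f} per nm")
```

A positive slope for the crop region and a negative one for the ship region reproduces, in miniature, the opposite trends visible in the spectral plot.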

Fusion Explorer displaying the selected regions and their spectra

Build datasets and models

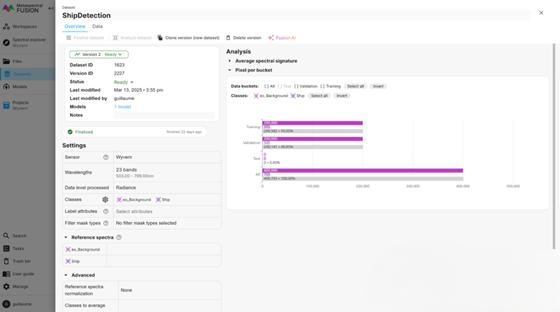

After exploring the image, the next step is to use deep learning to build an object detection model that identifies ships sailing in the Suez Canal based on their spectral signatures. In Fusion, this takes just two steps. First, we construct a dataset from the regions labeled in the image. Since we are focused on object detection, only two classes are needed: ship and background (any non-ship object). Note that a dataset can also be created directly from a single reference spectrum in the spectral library, in which case it is built from synthetic pixels generated from that spectrum.
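Conceptually, a pixel-level dataset like this turns every labeled pixel into one sample whose features are its spectrum. The NumPy sketch below shows that idea for the two classes, plus a purely hypothetical way of generating synthetic pixels from one reference spectrum (random brightness scaling plus Gaussian noise). Fusion's actual dataset format and synthesis method are not described in the article; `pixels_from_masks`, `synthesize_from_reference`, and their parameters are illustrative names only.

```python
import numpy as np

SHIP, BACKGROUND = 1, 0  # the two classes used in the article


def pixels_from_masks(cube, ship_mask, background_mask):
    """Turn labeled regions of a (rows, cols, bands) cube into (X, y).

    Each selected pixel becomes one sample; its spectrum is the feature vector.
    """
    ship = cube[ship_mask]
    background = cube[background_mask]
    X = np.concatenate([ship, background], axis=0)
    y = np.concatenate([np.full(len(ship), SHIP), np.full(len(background), BACKGROUND)])
    return X, y


def synthesize_from_reference(reference, n, rng, scale_range=(0.8, 1.2), noise_std=0.01):
    """Hypothetical synthetic-pixel generator (not Fusion's method):
    scale one reference spectrum by a random brightness factor and add noise."""
    scales = rng.uniform(*scale_range, size=(n, 1))
    noise = rng.normal(0.0, noise_std, size=(n, reference.shape[0]))
    return scales * reference[np.newaxis, :] + noise


rng = np.random.default_rng(0)
cube = rng.random((10, 10, 23))          # toy 10 x 10 image with 23 bands
ship_mask = np.zeros((10, 10), dtype=bool)
ship_mask[4:6, 4:7] = True               # a 2 x 3 "ship"
X, y = pixels_from_masks(cube, ship_mask, ~ship_mask)
print(X.shape, int(y.sum()))             # (100, 23) 6
synthetic = synthesize_from_reference(cube[5, 5], 50, rng)
print(synthetic.shape)                   # (50, 23)
```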

As shown below, the training and validation sets contain 562 and 141 ship pixels, respectively, and 200,000 background pixels each.
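These figures imply a strongly imbalanced dataset: ships make up less than 0.3% of either split. The short calculation below makes that explicit and also shows the accuracy a trivial model would reach by predicting "background" for every pixel, which is why per-class measures such as detection rate and false alarm rate are more informative here than overall accuracy. It reads "200,000 background pixels each" as a per-split count; `class_shares` is an illustrative helper.

```python
def class_shares(n_ship: int, n_background: int) -> tuple[float, float]:
    """Return (share of ship pixels, accuracy of always predicting 'background')."""
    total = n_ship + n_background
    return n_ship / total, n_background / total


# Figures from the dataset view; background count assumed to be per split.
for name, n_ship in [("training", 562), ("validation", 141)]:
    share, trivial_acc = class_shares(n_ship, 200_000)
    print(f"{name}: ship share {share:.2%}, all-background accuracy {trivial_acc:.2%}")
```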

Fusion dataset view

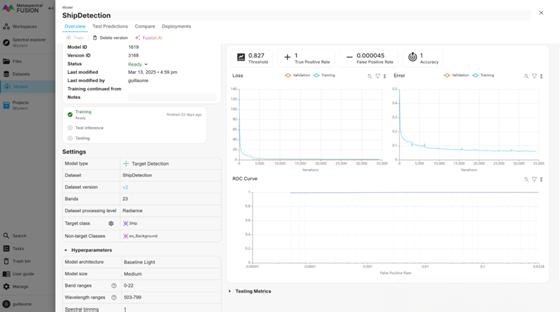

Next, we use this dataset to train a custom deep learning model for ship detection. As shown below, the model correctly identified all ship pixels, with a false alarm rate of only 0.000045 (0.0045%).
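The two numbers above follow from standard confusion-matrix definitions: detection rate is the fraction of ship pixels labeled "ship", and false alarm rate is the fraction of background pixels wrongly labeled "ship". The sketch below assumes, since the article does not say, that both were measured on the validation split (141 ship pixels, 200,000 background pixels); under that assumption, a false alarm rate of 0.000045 corresponds to 9 misclassified background pixels. The function name is illustrative.

```python
def detection_metrics(tp: int, fn: int, fp: int, tn: int) -> tuple[float, float]:
    """Detection rate (recall on ships) and false alarm rate (on background)."""
    detection_rate = tp / (tp + fn)
    false_alarm_rate = fp / (fp + tn)
    return detection_rate, false_alarm_rate


# Assumption: validation split with 141 ship and 200,000 background pixels.
# 0.000045 * 200,000 = 9 background pixels flagged as ship.
dr, far = detection_metrics(tp=141, fn=0, fp=9, tn=200_000 - 9)
print(dr, far)  # 1.0 4.5e-05
```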

Fusion displaying the performance of the ship detection model

Visualize the results

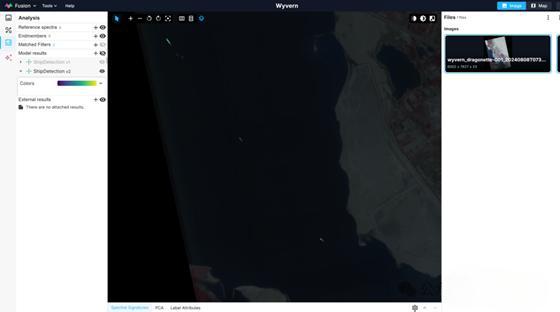

Back in the Fusion Explorer view, we can visualize the model's inference results, which are overlaid on the original image with slight transparency. Many of the ships sailing in the canal, including the smallest ones that were not labeled initially, can now be clearly identified by their yellowish-green tint.
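A semi-transparent overlay like this is usually produced by alpha blending: each detected pixel becomes a weighted mix of its original color and a highlight color. The NumPy sketch below is a minimal illustration, not Fusion's rendering code; the yellowish-green RGB value and the opacity `alpha=0.7` (mostly opaque, i.e. slightly transparent) are assumptions.

```python
import numpy as np


def overlay_mask(rgb, mask, color=(0.6, 0.9, 0.2), alpha=0.7):
    """Alpha-blend `color` over the pixels where `mask` is True.

    rgb: float array (rows, cols, 3) with values in [0, 1]
    mask: bool array (rows, cols), e.g. the model's per-pixel ship predictions
    alpha: opacity of the highlight (hypothetical default)
    """
    out = rgb.copy()  # leave the original image untouched
    tint = np.asarray(color, dtype=rgb.dtype)
    out[mask] = (1.0 - alpha) * rgb[mask] + alpha * tint
    return out


rgb = np.zeros((4, 4, 3))           # toy all-black preview image
mask = np.zeros((4, 4), dtype=bool)
mask[1, 1] = True                   # one detected "ship" pixel
out = overlay_mask(rgb, mask)
print(out[1, 1])
```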

Fusion Explorer view, with the model inference results superimposed on the original image

Fusion Explorer view, with the model inference results superimposed on the original image (enlarged)

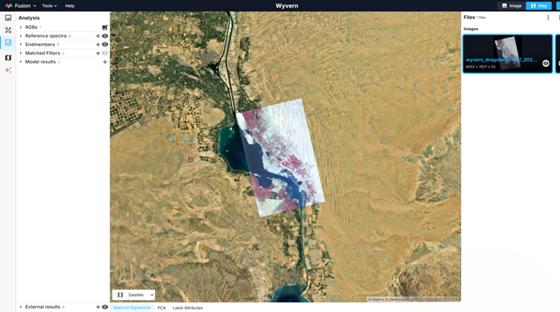

For georeferenced images, the same image and results can also be displayed on a base map.
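Placing pixels on a base map relies on the image's georeference, which in the widely used GDAL convention is a six-coefficient affine geotransform mapping (column, row) to map coordinates. The sketch below applies that convention; the geotransform values (a UTM-style origin and a 5.3 m pixel size) are hypothetical, and the article does not state how Fusion stores or applies georeferencing.

```python
def pixel_to_map(geotransform, col, row):
    """Map pixel (col, row) to georeferenced (x, y) with a GDAL-style geotransform.

    geotransform = (x_origin, pixel_width, row_rotation,
                    y_origin, col_rotation, pixel_height)
    (col, row) = (0, 0) is the top-left corner of the top-left pixel;
    add 0.5 to each to get pixel centers.
    """
    x0, px_w, rot_x, y0, rot_y, px_h = geotransform
    x = x0 + col * px_w + row * rot_x
    y = y0 + col * rot_y + row * px_h
    return x, y


# Hypothetical north-up geotransform: 5.3 m pixels, no rotation.
gt = (500000.0, 5.3, 0.0, 3350000.0, 0.0, -5.3)
x, y = pixel_to_map(gt, 10, 20)
print(x, y)
```

A negative pixel height is the usual north-up case: row numbers increase downward while northing decreases.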

The Fusion platform draws on extensive cloud computing resources to analyze large datasets containing hundreds of hyperspectral images in an intuitive and scalable way. It offers classification, regression, object detection, and spectral unmixing models, and can efficiently turn large volumes of hyperspectral data into actionable insights. It is well suited to both remote sensing and industrial hyperspectral applications.
